What Automation and Offshoring Tell Us About the Labor Market Effects of Artificial Intelligence

Part One of Our Series: AI, Labor Markets and the University

Series Introduction

Universities and faculty are struggling to respond to the influx of generative AI tools and their impact on our research and teaching. Should we articulate campus-wide AI policies or leave it to individual faculty? Should we ban AI, or teach it? Do we give up on take-home essays and make students handwrite them in class?

These are important questions. But they are less important than the question of how AI might reshape our students’ career opportunities, and what that implies for the entire enterprise of higher education. We have put together a five-part (!!) series to help our colleagues on the faculty and in administration understand the underlying issues. Then they can get back to the question of how hard it is to decipher handwritten in-class essays…

Here is what you will read over the next five weeks. (And if you find our perspective helpful, please share with colleagues and invite them to subscribe!)

1. What Automation and Offshoring Tell Us About the Labor Market Effects of Artificial Intelligence

We have decades of experience with the automation of farm and factory, and the offshoring of production to low wage countries. What can we learn from these historical analogues to AI about labor substitution, augmentation, and demand stimulation?

2. AI Adoption and Effects on Entry-level Hiring and Jobs: The Evidence So Far

It has been three years since ChatGPT, then running on GPT-3.5, exploded onto the scene, and AI firms are making truly massive investments to capitalize on it. What do we know about AI adoption by firms, changes in entry-level jobs and hiring patterns, and the productivity of workers and firms who adopt AI?

3. How is Human Reasoning Different from Predictive AI Tools?

Will AI tools ultimately amount to souped-up calculators to which we offload difficult but menial cognitive chores, or will they act as autonomous “agents” or even superhuman intelligences capable of their own breakthroughs?

4. Experience and the Role of Academic Disciplines

We explore why AI can’t replace lived experience or an understanding of the causal analytical frameworks offered by academic disciplines, but instead makes these tools even more powerful for improving performance and advancing human knowledge.

5. Lessons for University Strategy

We don’t know where all of this will ultimately land, but universities can’t wait for some steady state to emerge. We pull our previous four posts together into a set of ideas for universities to consider as they develop their ‘AI strategy’ during this time of rapid change.

Let’s get started…

What Automation and Offshoring Tell Us About the Labor Market Effects of Artificial Intelligence

While it is unclear precisely how artificial intelligence will affect the economy, much of the conversation has focused on the possibility that these AI tools will begin to replace large numbers of workers. This is not the first time we’ve heard such concerns – automation of work on the farm and in the factory, and the offshoring of manufacturing have led to similar replacement effects in decades past. What can we learn from these episodes?

In 1850, roughly two-thirds of the US workforce was employed in agricultural production. Then better tools, mechanization, and improvements in irrigation, seed, fertilizers, and pesticides sharply increased farm yields. Between 1950 and 2021, farm productivity improved by a factor of 3, and the labor employed in agriculture fell dramatically, reaching just 1.2 percent of the US workforce.

Starting in the 1980s, manufacturing firms began to substitute industrial robots for labor, and beginning around 2000, offshoring accelerated that trend as companies moved manufacturing to other countries. Even though US manufacturing output rose 80 percent, labor’s share of manufacturing value-added fell from 68 to 52 percent, and the number of workers employed in US manufacturing fell from 19.5 million in 1979 (20% of the workforce) to 12.8 million (8% of the workforce) today.

Labor Effects: Substitutes, Complements, and Demand Stimulation

These stories seem straightforward: automation and offshoring take jobs, and we might expect AI to do the same… only for white-collar workers this time. But the overall trends noted above mask three competing effects. The first is labor substitution. When firms and farms substitute more efficient capital for labor, they reduce the amount of labor needed for each bushel of wheat or car produced.

The second effect is labor complementarity. Some technologies augment the productivity of certain kinds of labor. Factory automation replaced labor used in assembly but created demand for more educated workers who design and implement automated systems. Computers replaced typists and manual bookkeepers, but created demand for anyone who could design or program computers, or even just use the power of computers to accelerate or improve the quality of their own work. Offshoring allowed foreign workers to replace US workers with low education levels, but augmented demand for US workers with high education levels.

The third effect is demand stimulation. In agriculture, productivity growth has lowered the price of agricultural output by 50 percent since the 1950s. The (quality adjusted) price of consumer electronics has fallen 95 percent since 1997. Lower prices lead consumers to buy more of the product, and can actually increase the total amount of labor employed. (For example, suppose automation enables us to reduce labor employed in making a car from 100 hours to 80, but car sales double from 50 to 100. Total labor employed goes from 5000 hours to 8000 hours.)
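The car example separates the two forces at work: substitution cuts the hours needed per car, while demand stimulation raises the number of cars sold. Here is a minimal Python sketch of the same arithmetic; the function name is ours, and the numbers are the hypothetical ones from the example.

```python
def total_labor_hours(hours_per_unit: float, units_sold: float) -> float:
    """Total labor employed = labor needed per unit x number of units sold."""
    return hours_per_unit * units_sold

# Before automation: 100 hours per car, 50 cars sold.
before = total_labor_hours(100, 50)              # 5000 hours

# Substitution alone: 80 hours per car, sales unchanged at 50.
substitution_only = total_labor_hours(80, 50)    # 4000 hours

# Substitution plus demand stimulation: 80 hours per car, sales double to 100.
with_demand = total_labor_hours(80, 100)         # 8000 hours

# Sales growth needed just to keep total labor unchanged: 100 / 80 = 1.25,
# i.e. sales must rise 25% to offset a 20% cut in hours per car.
break_even_growth = 100 / 80

print(before, substitution_only, with_demand, break_even_growth)
```

The break-even figure is the useful one: with a 20 percent cut in hours per car, sales must grow by more than 25 percent before total employment rises.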

The demand stimulation effect need not work only on prices for a fixed set of products. Technological change and offshoring also lead firms to improve the quality of products, offering better features or performance, or creating entirely new items. These quality and product variety effects can stimulate demand more dramatically than simply making something cheaper.

Labor substitution is the main focus of AI commentators. They ask: how many jobs will be lost as AI performs work now done by people? They should ask two more questions. Which types of labor will AI augment, and how much will AI-enabled firms and industries stimulate demand if prices drop or new products are put on offer?

The labor substitution/labor augmentation effects are really interesting because they get at some fundamental questions. Like, what are humans capable of that AI is not? And, what would make it difficult for firms to engage in wholesale adoption of AI to replace large chunks of their labor force? And what the heck should universities and their students do about all this? But first, let’s talk about the historical evidence on demand stimulation.

Historical Evidence on Demand Stimulation

Demand stimulation asks: how responsive is consumer demand to changes in prices, product quality and new variety? Let’s focus on demand for a simple product like wheat, or a pair of shoes. If only some firms within an industry adopt a new technology that lowers labor costs for those firms, employment will paradoxically increase in those firms and decrease at their competitors. But an industry-wide shift toward labor-saving technology is likely to reduce overall employment in that industry.[1]

In the case of agriculture, people responded to falling prices by eating more, eating differently (more animal protein), and using agricultural products for industrial applications (biofuels). But despite our best efforts and growing waistlines, we didn’t eat enough or find enough industrial markets to make up for tripled farm productivity. Labor employed in agriculture fell dramatically.

As for footwear, here’s a fascinating graphic from the Washington Post, which shows what happened with employment in shoe making. First, a wave of factory mechanization replaced traditional cobblers, then offshoring moved that shoe production overseas. We’ve all got a lot more shoes in our closet than people had in 1850… but not enough to employ 2.75% of the US workforce. (That would be 4.5 million shoemakers today…)

Source: Washington Post.

But focusing on wheat, or shoes, misses the most interesting parts of what happens in the modern economy. Increasingly we find that technological change and more efficient production enable an explosion of new products and services we didn’t even know we wanted. In 1943, IBM Chairman Thomas Watson supposedly offered the hysterically inapt prediction, “I think there is a world market for maybe five computers.” Let’s think about just one company.

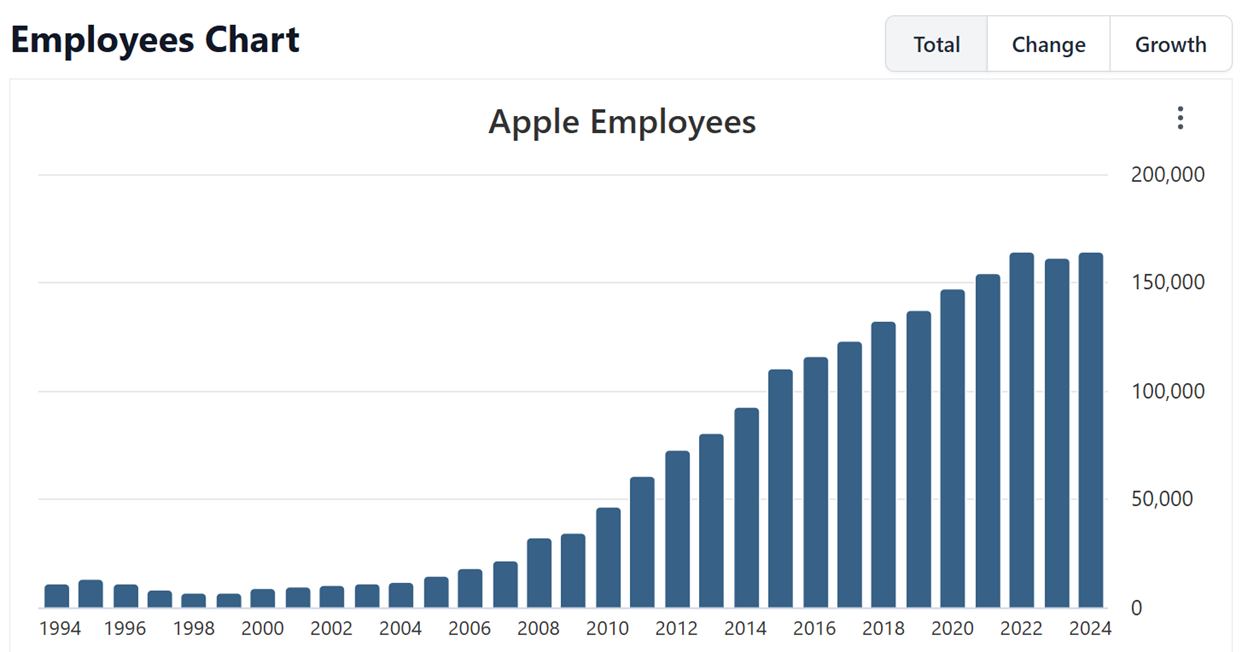

Apple began life in 1976 with Steve Jobs and Steve Wozniak soldering motherboards in their garage. By 1998, Apple employed 6,658 people and had a market capitalization of around $2 billion. Today it is worth 2000 times that amount, just under $4 trillion; it directly employs 164,000 people worldwide, contracts with many more through foreign suppliers, and claims to “support” another 2 million US jobs through suppliers and App Store developers. That is demand stimulation on steroids.

What happened? Apple’s employment growth really takes off in 2007, with the launch of the iPhone. iPhone sales now directly generate more than half of Apple’s revenues, and if you include all the ancillary services and devices that iPhones generate and support (think: App store sales, Apple Watch), it’s more like 80%.

Source: https://stockanalysis.com/stocks/aapl/employees/

It’s notable that Apple doesn’t directly manufacture any of that hardware in the US. That explosive growth reflects Apple’s ability to combine its technological design and marketing prowess with offshoring high-quality manufacturing to low-cost Asian countries. This allowed it to hit a price point for the iPhone that made smartphone ownership possible for a very large market! But it also facilitated a massive expansion of auxiliary applications for services we didn’t even know we needed.

The larger point is that it’s simply wrong to think about production as a zero-sum contest between capital and labor, or between labor in the US versus labor in Asia, or between labor provided by people versus “labor” provided by AI agents for a fixed set of goods. The creatively destructive powers of technological change are most evident, not when they are lowering costs, but when they are expanding the set of what we can do and what we make available for people to consume.

Disaggregating Tasks

In many ways, incorporating AI into the workforce is much closer to offshoring production than it is to mechanizing agriculture or automating factory floors. We are asking a machine intelligence that we do not fully understand or control to handle for us a subset of tasks that we used to perform ourselves. Let’s build out the analogy and draw on decades of experience in offshoring production to provide some useful insights.

Modern production involves many distinct stages (aka tasks). For manufacturing that means R&D, product design and testing, parts and components manufacture, assembly, and marketing and distribution, all undergirded by a set of “back-office” services including IT, HR, and finance. Service industries have similar stages, but without the physical artifacts of goods or their subcomponents.

Because each of these stages is produced using different inputs (R&D needs scientists and engineers; assembly needs manual labor), firms can reduce expenses by moving production of some stages/tasks to locations that provide those inputs less expensively. Back-office IT services get offshored to India. Manufacturing assembly, and later most of the manufacturing value chain, gets offshored to China or other locations around Asia. Each time this happens the labor share of output for the offshoring firm falls, but costs also fall and, as a result, sales increase. The net effect is often to increase employment in that firm by stimulating demand.

What Caused Offshoring to Explode?

Not all firms and not all processes are amenable to offshoring. And even though the logic of international specialization based on stages of production has always made sense, offshoring didn’t really take off until the advent of significant changes in the technological and policy environment from the mid-1990s onward.

Keep in mind that when a firm separates production across locations, it creates a more complicated workflow. Even if a foreign location can produce at lower cost, information and physical products have to flow from one location to another. (And repeatedly! The North American automobile value chain involves parts and components crossing international boundaries 7-8 times.) The explosion in offshoring didn’t happen until there were sharp reductions in the costs of moving goods and information across borders and oceans.

Transportation costs fell due to the containerization of ocean cargo, and jet-powered air freight bridged both distance and time, with profound implications for reorganized production. Political barriers to segmenting production also fell: tariffs dropped, and protections for intellectual property and foreign investment were strengthened. Information technology made it possible to directly offshore back-office services, while also providing a dramatically improved flow of information across segmented production locations. Firms could communicate design specifications, track the flow of inputs, and provide instantaneous information about defects or stockouts.

Verification, that is, ensuring the quality and reliability of foreign production, is critical in this process. It’s no bargain to purchase low-cost inputs from abroad if they arrive late, in the wrong amounts, or with quality defects. Planned cost savings turn into lower profits if quality or delivery problems shut down a production line or drive away loyal customers.

Lessons for AI in the Workforce

What can we learn from the offshoring experiment about the use of AI in the workforce?

First, think in terms of tasks, not in terms of overall employment or even integrated occupations as they have been traditionally defined. Apple doesn’t think success means owning every stage of an iPhone’s creation. It grew 2000 times more valuable when it stopped trying to do everything; it focuses on design, software, marketing, and distribution and lets Asian suppliers handle the manufacturing.

We can reframe work by asking: how does a worker divide their day or week across many distinct tasks? You might try this exercise. Get out some paper and write down every distinct thing you do during the day, and how much time you spend doing it. Then at the end of the day, revisit that, and add all the things you forgot. If you’re like most people, you don’t do one thing. You do 50.

Second, which of your many tasks might be subject to automation or augmentation by AI tools? This is a deeply interesting topic, so we will tackle it at length in the next three essays. The really short answer is that AI tools are “only” good at prediction. But we’re starting to learn how many things can be predicted (not just the weather, but words) and how powerful that might be.

Third, individual tasks are not valuable by themselves, any more than Apple’s design or manufacturing is valuable in isolation. It’s the efficient combination of tasks to deliver a final product or service that is valuable to firms. Even though these AI tools appear miraculous at times, their use-cases are often much narrower than the ways we traditionally define someone’s work day.

As an example, OpenAI has created a new set of benchmarks aimed at measuring how well its models perform “real world” tasks that we might require of various occupations. What struck me in reading this was how narrow the task set was, and how little of a person’s day would be taken up by the tasks OpenAI wanted to automate for you. In our next essay we will provide some evidence on how much people are currently using generative AI tools and how much time it saves them. Answer: not that much. Are you really going to lay someone off because a tool can do 5% of their job for them?

Fourth, separating and then later recombining distinct tasks is not trivial. Offshoring of production didn’t take off until it became much cheaper and easier for information and goods to flow across borders. Even if AI can automate or augment particular tasks, what is the workflow between those tasks and what are the barriers to their re-integration if we offload some but not all of these tasks to AI tools?
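The task-logging exercise suggested earlier can be tallied in a few lines. Here is a minimal Python sketch; the `Task` type, the `ai_amenable_share` function, and the task list itself are hypothetical, made up for illustration rather than drawn from any study, and whether a task counts as AI-amenable is your own judgment call.

```python
from dataclasses import dataclass

@dataclass
class Task:
    name: str
    hours_per_week: float
    ai_amenable: bool  # your own judgment: could an AI tool plausibly do or speed up this task?

def ai_amenable_share(tasks: list[Task]) -> float:
    """Fraction of logged weekly hours spent on tasks flagged as AI-amenable."""
    total = sum(t.hours_per_week for t in tasks)
    amenable = sum(t.hours_per_week for t in tasks if t.ai_amenable)
    return amenable / total if total else 0.0

# Hypothetical task log for illustration only; not data from any study.
week = [
    Task("draft routine status emails", 1.0, True),
    Task("meet with clients", 11.0, False),
    Task("review and approve team work", 8.0, False),
    Task("summarize meeting notes", 1.0, True),
    Task("plan projects and budgets", 9.0, False),
    Task("troubleshoot problems as they arise", 10.0, False),
]

print(f"{ai_amenable_share(week):.1%} of logged hours flagged as AI-amenable")
```

Even if a tool handled every flagged task perfectly, it would free up only that share of the week, and the handoff costs described in the fourth point could eat into even that.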

Barriers to Integrating AI Tools into the Workflow

The transformer architecture that powers modern generative AI tools predated the explosive launch of ChatGPT in late 2022 by five years. But it was the chatbot interface that made the tool useful to many by lowering the costs of interacting with it. As we go deeper into integration with AI tools, several barriers to interaction and re-integration remain significant and perhaps insurmountable: regulation, verification, and security.

As US firms are currently learning, the best offshoring plan will fall apart if government regulation and taxation intervene. The big AI integration risks here are: copyright; government regulation of privacy and confidentiality, particularly around medical, financial, and personnel data, but also related to child safety; and regulation of physical objects such as driverless cars that embody AI use.

Verification and minimizing defects are a major problem with generative AI. Modern quality control in manufacturing tolerates only exceptionally rare minor defects: a “six-sigma” standard implies tolerating something like 3.4 defects out of every one million parts. Without standards like this, offshoring production to suppliers who operate at a distance and with long lead times would be infeasible.

The hallucination rate for the best-performing generative AI on the easiest problems is something like 1000 times worse than that. Many experts (including, evidently, OpenAI’s scientists…) think hallucination is fundamentally unfixable and inherent to the architecture. That has financial consequences for adopting firms. It has also become a source of great embarrassment, and possibly legal sanctions, for other professionals such as attorneys and even judges. Worse, the more we hand complex and detailed work to AI agents, the less we know about the work they are doing, and the harder it is for us to detect these defects.
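To see what the gap between these defect rates means in practice, here is a rough Python sketch. It takes the six-sigma benchmark as about 3.4 defects per million and applies the text’s “something like 1000 times worse” literally. The 10,000-item batch, the 50-step chain, and the assumption that each step fails independently at the same rate are simplifications chosen for illustration, not measurements of any particular AI tool.

```python
SIX_SIGMA_RATE = 3.4 / 1_000_000   # ~3.4 defects per million (six-sigma benchmark)
AI_RATE = 1000 * SIX_SIGMA_RATE    # "something like 1000 times worse," taken literally

def expected_defects(rate: float, n_items: int) -> float:
    """Expected number of defective items among n_items, each failing with probability rate."""
    return rate * n_items

def p_any_defect(rate: float, n_steps: int) -> float:
    """Probability that a chain of n_steps contains at least one defect.

    Assumes (a simplification) that every step fails independently
    with the same probability.
    """
    return 1 - (1 - rate) ** n_steps

for label, rate in [("six-sigma", SIX_SIGMA_RATE), ("1000x worse", AI_RATE)]:
    print(f"{label:>12}: {expected_defects(rate, 10_000):6.2f} defects per 10,000 items, "
          f"P(any defect in 50 steps) = {p_any_defect(rate, 50):.2%}")
```

Under these assumptions the expected number of defects in the batch jumps from well under one to dozens, and because errors compound, a long chain of handoffs at the higher rate goes wrong somewhere far more often than any single step suggests.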

Finally, a potential solution to the task handoff problem is to allow AI agents to integrate more deeply with our workflow and act with greater autonomy. If you check every step of their work, you have lost all of the time savings you had hoped for (and possibly more). But allowing autonomy means they are doing things you don’t understand and don’t control, and that may create extreme security vulnerabilities. (Go read this 2023 NIST report on adversarial machine learning, or this update on AI attack vectors, and be very afraid.)

Summary… And What’s Coming Next Week

My hypothesis is that in the near term most firms will struggle to significantly reduce labor inputs, even in cases where AI tools are demonstrably better and cheaper at some tasks. The reason is that too many of the tasks workers perform are not amenable to AI disruption, and the handoffs between tasks are sufficiently difficult to navigate, that the costs will offset much of the savings. Fully exploiting AI tools means completely rethinking task sets and workflows.

There may be some exceptions to this. Not all jobs are complex; some consist of doing the same “routine” task over and over. As it happens, those are precisely the jobs that were offshored over the last few decades. They are also likely the ones that will disappear in an AI-enabled firm.

The more powerful and interesting case is that augmenting labor with AI tools will lead to the creation of entirely new products and services, which will not only create value for firms but also lead the most successful among them to actually expand employment.

These predictions are based on historical analogies. But things are moving fast! So what IS going on currently with AI adoption and labor substitution/augmentation? Importantly for universities, how is it impacting entry-level hiring and the work world new graduates are moving into? Jay digs into those questions next week.

“Finding Equilibrium” is coauthored by Jay Akridge, Professor of Agricultural Economics, Trustee Chair in Teaching and Learning Excellence, and Provost Emeritus at Purdue University, and David Hummels, Distinguished Professor of Economics and Dean Emeritus at the Daniels School of Business at Purdue.

[1] Demand for specific firms within most industries tends to be highly responsive to changes in prices, whereas demand for an industry as a whole tends to be much less responsive. For example, suppose we’ve got two grocery stores in town, Kroger and Meijer, and Kroger cuts its prices by 10%. Kroger would likely attract so many customers from its rival that its revenues would increase, while Meijer’s revenues decreased. That is a within-industry reallocation. But if both stores cut their prices by 10%, people would use some of that savings for other things (eating in restaurants, buying clothes or electronics) and total grocery revenues for the two stores would likely fall. Relatedly, the research literature shows clearly that when a specific firm offshores production more than a typical firm in its industry, its productivity rises and its sales increase relative to those other firms. The reason is that most firms face, within their industry, elastic demand for their products: quantities rise proportionally more than prices fall. But that won’t happen if *every* firm in that industry drops prices by the same amount.
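The grocery example can be made concrete with a constant-elasticity demand curve, a standard textbook simplification that we are assuming here. The elasticity values below are hypothetical, chosen only to contrast elastic firm-level demand with inelastic industry-level demand; they are not estimates for Kroger, Meijer, or groceries.

```python
def revenue_ratio(price_change: float, elasticity: float) -> float:
    """Ratio of new to old revenue after a proportional price change.

    Assumes constant-elasticity demand Q = A * P**(-elasticity), so revenue
    R = P * Q = A * P**(1 - elasticity) and the ratio is
    (1 + price_change) ** (1 - elasticity).
    """
    return (1 + price_change) ** (1 - elasticity)

price_cut = -0.10  # a 10% price cut, as in the grocery example

# Hypothetical elasticities, for illustration only:
firm_elasticity = 4.0      # one store cuts prices and pulls customers from its rival (elastic)
industry_elasticity = 0.5  # both stores cut; shoppers buy only a little more food (inelastic)

print(f"one store cuts:  revenue x {revenue_ratio(price_cut, firm_elasticity):.3f}")
print(f"both stores cut: revenue x {revenue_ratio(price_cut, industry_elasticity):.3f}")
```

With an elasticity above 1, the price cut raises revenue; below 1, it lowers revenue; at exactly 1, revenue is unchanged. That threshold is what separates the single-store case from the industry-wide case.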
